By Tiffany Fox

Albert Einstein may have written his last scientific theory more than half a century ago, but he's still honing his emotional intelligence in a laboratory at the University of California, San Diego.

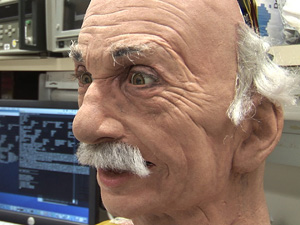

Scientists at UC San Diego's California Institute for Telecommunications and Information Technology (Calit2) have equipped a robot modeled after the famed theoretical physicist with specialized software that allows it to interact with humans in a relatively natural, conversational way. The so-called "Einstein Robot," which was designed by Hanson Robotics of Dallas, Texas, recognizes a number of human facial expressions and can respond accordingly, making it an unparalleled tool for understanding how both robots and humans perceive emotion, as well as a potential platform for teaching, entertainment, fine arts and even cognitive therapy.

"In the short term, Einstein is being used to develop computer vision so we can see how computers perceive facial expressions and develop hardware to visually react," says Javier Movellan, a research scientist in the Calit2-based UCSD Machine Perception Laboratory (MPL). "This robot is a scientific instrument that we hope will tell us something about human-robot interaction, but also human-to-human interaction.

"When a robot interacts in a way we feel is human, we can't help but react. Developing a robot like this one teaches us how sensitive we are to biological movement and facial expressions, and when we get it right, it's really astonishing."

The Einstein Robot — a head-and-shoulders automaton complete with unruly white hair and bushy mustache — made its public debut at the Technology, Entertainment and Design (TED) conference in Long Beach last week. David Hanson, the robot's primary designer and owner of Hanson Robotics, amazed a crowd of 1,500 with Einstein's capacity to understand and mimic expressions. Several graduate students from the MPL accompanied Hanson to the conference, which was established to facilitate creative collaborations among scientists, entrepreneurs and designers.

Evoking realistic facial expressions in a machine made of wires and gears is no small feat, Hanson says. For Einstein to crack a smile, 17 of the robot's 31 motors must whir into action and subtly adjust multiple points of articulation around his mouth and piercing brown eyes. To express confusion, Einstein furrows his brow, but even that movement — which is second nature for most humans — is difficult to re-create in a robot. To achieve a realistic result, Hanson constructed Einstein's face from a patented, flesh-like material known as Frubber™, which he created after extensive research into facial anatomy, physiology, materials science and soft-bodied mechanical engineering. Hanson even went so far as to fashion the Frubber™ with realistic pores that measure in the macro-molecular scale at 4 to 40 nanometers — requiring him to take a crash course in nanotechnology.

"I know how the face needs to look when it deforms into a given expression, and I can see when an expression looks good," notes Hanson, a former Disney Imagineer. "But in addition to all these science and engineering studies, there's a certain magic of facial aesthetics that's beyond the scope of scientists. Artists understand it somehow, and are able to externalize facial movements and conversational interaction in external media like sculpture and film animation. However, this has not been successfully imported to robotics. Instead of sculpting it in marble, I have to get the Frubber™ material and the internal mechanisms to move into that expression on demand, and achieve that expression in the context of an interaction with a human."

The robot's internal facial recognition software is what provides that context. Developed by Movellan and a team of graduate students at Calit2, the software is based on a series of computational algorithms derived from an analysis of more than one million facial images. It allows Einstein to understand and respond to a number of "perceptual primitives," such as expressions of sadness, anger, fear, happiness and confusion, as well as facial cues suggesting age and gender (even whether the person interacting with the robot is wearing glasses). The robot's parallel facial action coding system can detect simple gestures like nods, and mimic those reactions.
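
The perceive-then-mimic loop described above can be pictured with a minimal Python sketch. It is an illustration only, not the MPL or Hanson Robotics software: the function names, the confidence threshold, and the motor indices and positions are all hypothetical. Only the expression labels and the counts (31 motors, 17 of them used for a smile) come from the article.

```python
from typing import Dict, List

MOTOR_COUNT = 31  # the article's figure for the robot's motors

# Placeholder poses. Which motors move, and how far (0.0 to 1.0), is invented
# for illustration; the article only says that a smile uses 17 of the 31 motors.
POSES: Dict[str, Dict[int, float]] = {
    "happiness": {motor: 0.6 for motor in range(17)},
    "confusion": {0: 0.8, 1: 0.8},  # stand-in for a furrowed brow
}


def pick_expression(scores: Dict[str, float], threshold: float = 0.5) -> str:
    """Return the highest-scoring expression, or "neutral" if none is confident."""
    label, score = max(
        scores.items(), key=lambda item: item[1], default=("neutral", 0.0)
    )
    return label if score >= threshold else "neutral"


def pose_for(label: str) -> List[float]:
    """Translate an expression label into one target position per motor."""
    targets = [0.0] * MOTOR_COUNT
    for motor, position in POSES.get(label, {}).items():
        targets[motor] = position
    return targets


def mimic(scores: Dict[str, float]) -> List[float]:
    """Mirror the viewer: pick the detected expression and return its pose."""
    return pose_for(pick_expression(scores))


if __name__ == "__main__":
    # Hypothetical classifier output for one video frame.
    frame_scores = {"happiness": 0.9, "anger": 0.05, "confusion": 0.05}
    active = sum(1 for target in mimic(frame_scores) if target > 0)
    print(pick_expression(frame_scores), active)
```

In this sketch a low-confidence frame falls back to a neutral pose, so the robot would not mirror an expression it has not clearly detected; whether the real system behaves that way is not stated in the article.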

Movellan, working with Jacobs School of Engineering computer science professor Yoav Freund, also succeeded in getting the robot to respond to audio cues such as clapping, which might prove helpful were Einstein to be used in an educational setting, for example. Movellan says he's hoping to have the robot's operational system fully integrated by June so that it can be deployed as a prototype robot teacher in a local high school, in much the same way that MPL's RUBI robot has been used to teach pre-schoolers.

Another important part of the robot's inner workings is its Character Engine Artificial Intelligence Control Software, which allows the programmer to author and define the persona of the character so it can hold a conversation.

"Einstein has pretty broad conversational abilities, although not like a human," Hanson notes. "In the demo mode, he might say something like, 'I'm an advanced perceptual robot bringing together many technologies into a whole that's greater than the sum of my parts, but here's what some of my parts can do. I can see your facial expressions and mimic them. I can see your age and gender. So why don't we demo some of these technologies?'"

During a demo, Einstein might turn his head, lock eyes with you, and then flash a dashing smile to mimic your own. But as loveable as the robot is, its developers have had to contend with a paradox familiar to all designers of humanoid robots: The more human-like the robot, the creepier it is to actual humans. And that's of crucial importance when one of the primary motivations behind the robot is to get humans to interact with it in a natural way.

"Some scientists believe strongly that very human-like robots are so inherently creepy that people can never get over it and interact with them normally," Hanson says, alluding to Japanese roboticist Masahiro Mori's "uncanny valley" hypothesis. Mori proposed that when robots look and act almost, but not quite, like actual humans, they provoke revulsion in human observers. "But these are some of the questions we're trying to address with the Einstein robot," explains Hanson. "Does software engage people more when you have a robot that's more aware of you? Are human-like robots inherently creepy, and if so is that a barrier, or is it not a barrier?

"We're trying to get past the novelty of the technology to a certain extent so that people can socially engage with the robots and get lost in that social engagement," he continues. "And in a sense, we naturally do that with other humans. If I have a big piece of spinach in my teeth or I have something cosmetically atypical about me, it might be difficult to get past those superficial barriers so that we can have a more meaningful conversation."

"As people get more comfortable with them, these robots are becoming more popular," adds Movellan (think Johnny Depp as the "Captain Jack Sparrow" automaton at Disneyland's "Pirates of the Caribbean"). "Although we're thinking of Einstein as a tool for science right now, in the future, I could see it being used in museums or as a way to teach people from other cultures how to interact with one another. You could, in principle, program the robot to interact in a more Japanese way, or a more Middle Eastern way. We're also exploring the use of the robot for children with autism. It could be used as a way to teach them facial expression recognition."

But for now, manufacturing robots like Einstein remains cost-prohibitive.

"This isn't yet a real manufacturing business — these robots are still being built by engineers, so they're still very expensive," Hanson cautions. "Right now it costs $50,000 and up for a robot with very few degrees of freedom; something full-featured like Einstein will cost $75,000 and up. But our aspiration and our core discoveries are targeting mass production and trying to get the robots made for under $200."

All applications and cost factors aside, Hanson and Movellan say their ultimate goal is to develop a creative, intelligent machine that rivals or exceeds a human level of intelligence and, perhaps most importantly, does so without compromising civilization and humanity.

"This is something on the order of an Apollo project or a Manhattan project or a Linux initiative," Hanson explains. "It requires a lot of people at a lot of institutions cooperating and competing with each other to find the best way of creating a complete mind for a robot. If things go really well, we're maybe 10 years away from that happening. But it's very important that we develop empathic machines, machines that have compassion, machines that understand what you're feeling. If these robots do become as intelligent as human beings, we want this infrastructure of compassion and empathy to be in place so the machines are prepared to use their intellectual powers for the good of civilization rather than in ways that undermine the stability of civilization. In a way, we're planting the seeds for the survival of humanity."

Media Contact: Tiffany Fox, 858-246-0353
